Software Architecture: Difference between revisions

From SpaceElevatorWiki.com

Revision as of 06:24, 6 July 2008

Codebase_Analysis

Multithreading

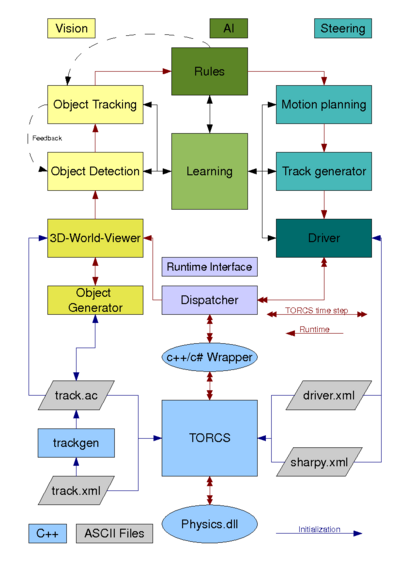

Architecture

Driving

The driving module will use an interface similar to that of TORCS:

- Input: data from the vision module and the world simulator.

- Output: high-level orders to the car simulator.
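Here is a minimal Python sketch of such a driving interface. It assumes the vision module reports obstacles as distance/lateral-offset pairs and the simulator reports speed and lane offset. All class and field names (`Obstacle`, `DrivingInput`, `DrivingOrders`, `Driver`) are hypothetical. The orders are only loosely modeled on the steer/accelerate/brake/gear controls a TORCS robot sets, not on TORCS's actual data structures.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Obstacle:
    # Hypothetical vision output: distance ahead (m) and lateral offset (m, + = left).
    distance: float
    lateral: float


@dataclass
class DrivingInput:
    # Combined input from the vision module and the world simulator (assumed fields).
    obstacles: List[Obstacle] = field(default_factory=list)
    speed: float = 0.0         # m/s, reported by the simulator
    track_offset: float = 0.0  # m from lane centre, + = left


@dataclass
class DrivingOrders:
    # High-level orders, loosely modeled on TORCS-style steer/accel/brake/gear commands.
    steer: float = 0.0  # -1 (full right) .. 1 (full left)
    accel: float = 0.0  # 0 .. 1
    brake: float = 0.0  # 0 .. 1
    gear: int = 1


class Driver:
    """Toy rule-based driver: keep to the lane centre, brake for close obstacles."""

    def __init__(self, target_speed: float = 20.0, safe_distance: float = 15.0):
        self.target_speed = target_speed
        self.safe_distance = safe_distance

    def drive(self, inp: DrivingInput) -> DrivingOrders:
        orders = DrivingOrders()
        # Steer back toward the lane centre, clamped to [-1, 1].
        orders.steer = max(-1.0, min(1.0, -0.5 * inp.track_offset))
        # Brake if any obstacle roughly in our lane is closer than the safe distance.
        blocked = any(o.distance < self.safe_distance and abs(o.lateral) < 1.5
                      for o in inp.obstacles)
        if blocked:
            orders.brake = 1.0
        elif inp.speed < self.target_speed:
            orders.accel = 1.0
        return orders
```

The driver only sees a `DrivingInput` and returns `DrivingOrders`. The same logic could therefore be fed by the vision module, by the world simulator's ground truth, or by a mix of both during testing.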

Vision

Vision module I/O:

- Input: screenshot of the world (real or virtual).

- Output: object geometry and any other information the AI needs.
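The following sketch makes the vision module's contract concrete. It takes a screenshot as a grayscale grid and returns bounding boxes of bright regions. The thresholding and flood fill are a deliberately simple stand-in for real object detection, and `detect_objects` and `BoundingBox` are hypothetical names.

```python
from collections import deque
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class BoundingBox:
    # Pixel coordinates, inclusive: (left, top) .. (right, bottom).
    left: int
    top: int
    right: int
    bottom: int


def detect_objects(screenshot: Sequence[Sequence[int]], threshold: int = 128) -> List[BoundingBox]:
    """Return one bounding box per 4-connected region of pixels >= threshold.

    `screenshot` is assumed to be a grayscale image stored as rows of ints.
    """
    height = len(screenshot)
    width = len(screenshot[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    boxes: List[BoundingBox] = []

    for y in range(height):
        for x in range(width):
            if seen[y][x] or screenshot[y][x] < threshold:
                continue
            # Breadth-first flood fill over the connected bright region.
            queue = deque([(x, y)])
            seen[y][x] = True
            left, top, right, bottom = x, y, x, y
            while queue:
                cx, cy = queue.popleft()
                left, right = min(left, cx), max(right, cx)
                top, bottom = min(top, cy), max(bottom, cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and screenshot[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append(BoundingBox(left, top, right, bottom))
    return boxes
```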

World Simulator

World simulator I/O:

- Input: progression of time and instructions to the car.

- Output: visual output for the user, screenshots for the vision module, APIs to find out where objects actually are, and a warning when our car has "crashed", which means there is a bug in the vision or driving logic.
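The world simulator's I/O can be illustrated with a one-dimensional toy model. It assumes a car that accepts only an acceleration command on a straight road with static obstacles. The class and method names are hypothetical. `vision_view` returns obstacle distances as a simplified stand-in for real screenshots.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class CarCommand:
    # Hypothetical simplified instruction: longitudinal acceleration in m/s^2.
    acceleration: float = 0.0


@dataclass
class WorldSimulator:
    """Toy 1-D world: one car on a straight road with static obstacles."""
    car_position: float = 0.0  # metres along the road
    car_speed: float = 0.0     # m/s, never negative
    obstacles: List[Tuple[float, float]] = field(default_factory=list)  # (start, end) in metres
    time: float = 0.0          # simulated seconds
    crashed: bool = False

    def step(self, dt: float, command: CarCommand) -> None:
        """Input side: advance time by dt seconds and apply the car's command."""
        if self.crashed:
            return  # the world freezes once a crash is reported
        old_position = self.car_position
        self.car_speed = max(0.0, self.car_speed + command.acceleration * dt)
        self.car_position += self.car_speed * dt
        self.time += dt
        # Crash warning: the distance covered this step overlaps an obstacle.
        # This signals a bug in the vision or driving logic, not in the simulator.
        if any(start <= self.car_position and end >= old_position
               for start, end in self.obstacles):
            self.crashed = True

    def vision_view(self, view_range: float = 50.0) -> List[float]:
        """Output for the vision module: distances to obstacles ahead (screenshot stand-in)."""
        return [start - self.car_position for start, _ in self.obstacles
                if 0.0 <= start - self.car_position <= view_range]

    def true_position(self) -> float:
        """Ground-truth API: where the car actually is, for checking the AI's estimates."""
        return self.car_position
```

The crash check tests the whole distance covered during a step. As a result, a large `dt` cannot carry the car past a thin obstacle unnoticed.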

Extra Modules

Between C# and TORCS, the dispatcher

The dispatcher splits the information coming from TORCS and forwards each part to the module that needs it. The current position and rotation of our car and of the opponents (including "parked cars" used as obstacles) are sent to the world viewer. Information about our fuel, brake temperature, etc. is sent to the AI part (rules, learning) and to the driver. The driver additionally receives data from the onboard sensors (engine RPM, etc.) and returns the commands that the dispatcher sends back to TORCS. While learning, we can also send information to other parts, both to get feedback about the quality of individual steps and to help the parts work independently.
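The dispatcher's routing can be sketched as a table that maps each module to the fields it receives. The sketch assumes a TORCS update arrives as a flat dictionary. The field names in `ROUTES`, the module names and the `Dispatcher` class are all hypothetical; the real split would depend on what the C#/TORCS bridge actually exposes.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Update = Dict[str, Any]

# Hypothetical routing table: which fields of a TORCS update each module receives.
ROUTES: Dict[str, List[str]] = {
    "world_viewer": ["own_pose", "opponent_poses"],  # position + rotation, incl. parked cars
    "ai": ["fuel", "brake_temperature"],             # rules and learning
    "driver": ["fuel", "brake_temperature", "rpm"],  # car state plus onboard sensors
}


class Dispatcher:
    """Splits each TORCS update by module and returns the driver's commands."""

    def __init__(self, routes: Dict[str, List[str]], driver: Callable[[Update], Update]):
        self.routes = routes
        self.driver = driver  # the one module whose answer goes back to TORCS
        self.listeners: Dict[str, List[Callable[[Update], None]]] = defaultdict(list)

    def subscribe(self, module: str, handler: Callable[[Update], None]) -> None:
        self.listeners[module].append(handler)

    def _slice(self, module: str, update: Update) -> Update:
        # Keep only the fields routed to this module that are present in the update.
        return {key: update[key] for key in self.routes.get(module, []) if key in update}

    def dispatch(self, update: Update) -> Update:
        for module in self.routes:
            if module == "driver":
                continue  # handled below, since its reply is needed
            part = self._slice(module, update)
            for handler in self.listeners[module]:
                handler(part)
        # Commands returned by the driver are what gets sent back to TORCS.
        return self.driver(self._slice("driver", update))
```

The routing lives in a table rather than in code. A learning component could therefore be added as one more entry (and listener) without changing the dispatcher itself.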