Software Architecture

No edit summary |

No edit summary |

||

| (11 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

[[Image:Dataflow.png|thumb|400 px]] | [[Image:Dataflow.png|thumb|400 px]] | ||

= | = [[Codebase_Analysis]] = | ||

[[Codebase_Analysis]] | |||

= | = [[DrivingScenarios]] = | ||

[[Multithreading]] | = [[Multithreading]] = | ||

= Architecture = | = Architecture = | ||

[[Image:Dataflow.odg]] | [[Image:Dataflow.odg]] | ||

= | == Skeleton of the C#-Part == | ||

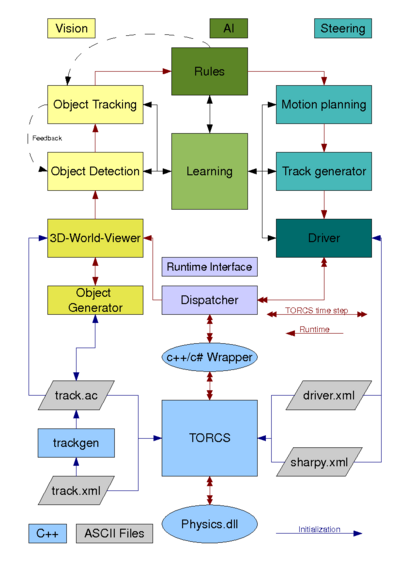

According to the dataflow diagram, the C#-part is installed and later called from the mono runtime which is installed and called from the C++/C#-wrapper (sharpy.dll/so). | |||

In the current basic implementation, the C#-part is an executable (SharpyCS.exe) started from the mono runtime. If the SharpyCS.exe is called directly, it only prints a short description, the version and the release date to the console: | |||

E:\SharpyCS>SharpyCS.exe | |||

C# SharpyCS for TORCS V1.3.0-V1.3.1 | |||

C# Version V 0.00.007 | |||

C# Released 2008-07-07 | |||

At the moment, the C++-Part of the wrapper creates the C#-Dispatcher object when the sharpy.dll is called from TORCS. All other objects (for the driver module) are created from the Dispatcher. | |||

After the initialization is finished TORCS calls the C++-Wrapper's Drive method each timestep (50 times per second). This method prepares the data provided by TORCS for the C#-Dispatcher and calls it. | |||

The Dispacher is used for all drivers/cars. It gets an index, to which driver the call is to send. Before the first driver is called in a timestep, the common used data of all cars is updated. This differs form the way TORCS does it in a pure C++ robot. We want all robots use the same instance of the commen data, so all the common data has to be prepared before the first use by a driver. | |||

The driver is computed as the main thread, because it has to answer in realtime to be able to use TORCS with our C#-Extention still as "game", means you could drive as human driver against our robot. | |||

For the next steps, we have to realize the other modules, from World Viewer over the Detection and Tracking to the AI module, and again over Motion planning back to the driver. | |||

We have to discuss, how to realize the single modules. | |||

To make the internal work of the modules independent from the drivers timestep (and to use the power of our multiprocessor systems) the modules should be based on threads. | |||

The first question is, can/shall a thread create and use it's own window, or should all modules use a common window. If a common window is used, the painting to it has to be done by calls to code from it. Here we had to figure out, how to synchronize it. | |||

If the needed code (classes to use) is threadsafe and we use different windows, the output of the single modules could be done independent, means programing would be more independent too. | |||

For a basic demo, I would like to display a simple window with a bird eye view to the position of the car. What classes to use here? Is there a code snipped how todo with mono? | |||

== [[TORCS_robot_driving| Driving]] == | == [[TORCS_robot_driving| Driving]] == | ||

| Line 17: | Line 43: | ||

* Input: from the Vision and world simulator | * Input: from the Vision and world simulator | ||

* Output: high level orders to the car simulator | * Output: high level orders to the car simulator | ||

== [[VisionArch |Vision]] == | == [[VisionArch |Vision]] == | ||

| Line 34: | Line 49: | ||

* Input: screenshot of the world (real or virtual). | * Input: screenshot of the world (real or virtual). | ||

* Output: object geometry, etc. and other information necessary to the AI. | * Output: object geometry, etc. and other information necessary to the AI. | ||

== [[World Simulator]] == | == [[World Simulator]] == | ||

| Line 51: | Line 55: | ||

* Input: Progression of time, instructions to the car | * Input: Progression of time, instructions to the car | ||

* Output: visual output for the user, screenshots for vision module, APIs to find out where stuff actually is. A warning when our car has "crashed", which means there is a bug in the vision or driving logic. | * Output: visual output for the user, screenshots for vision module, APIs to find out where stuff actually is. A warning when our car has "crashed", which means there is a bug in the vision or driving logic. | ||

== Extra Modules == | |||

=== Between C# and TORCS, the dispatcher === | |||

The dispatcher splits the information from TORCS and sends it to the relevant parts. | |||

The current position and rotation of our car and the opponents (including "parked cars" as obstacles) is send to the world viewer. | |||

Informations about our fuel, brake temperature etc. it sends to the AI part (rules, learning) and to the driver. The driver gets informations from the onboard sensors (engines rpm etc.). | |||

From the driver it gets back the commands to send back to TORCS. | |||

While learning, we also can send information to other parts, to get feedback about the quality of our single steps and to help to work independent. | |||

=== Torcs data structures in C# === | |||

I uploaded Wolf-Dieter's ODS to Google Docs as an experiment. We could also just maintain this text on a set of pages on the wiki, or just in code... What do you think? | |||

{{#widget:Google Spreadsheet | |||

|key=pXewQwBp7lZv8XCodm2NuEQ | |||

|width=1000 | |||

|height=700 | |||

}} | |||

Latest revision as of 08:09, 19 February 2009

Codebase_Analysis

DrivingScenarios

Multithreading

Architecture

Skeleton of the C#-Part

According to the dataflow diagram, the C#-part is set up and later called by the Mono runtime, which is itself set up and called by the C++/C#-wrapper (sharpy.dll/so).

In the current basic implementation, the C#-part is an executable (SharpyCS.exe) started by the Mono runtime. If SharpyCS.exe is called directly, it only prints a short description, the version, and the release date to the console:

E:\SharpyCS>SharpyCS.exe

C# SharpyCS for TORCS V1.3.0-V1.3.1

C# Version V 0.00.007

C# Released 2008-07-07

At the moment, the C++-part of the wrapper creates the C#-Dispatcher object when sharpy.dll is called from TORCS. All other objects (for the driver module) are created by the Dispatcher.

After initialization is finished, TORCS calls the C++-wrapper's Drive method each timestep (50 times per second). This method prepares the data provided by TORCS for the C#-Dispatcher and calls it.

The Dispatcher is used for all drivers/cars. It receives an index that identifies the driver to which the call is to be sent. Before the first driver is called in a timestep, the data shared by all cars is updated. This differs from the way TORCS does it in a pure C++ robot. We want all robots to use the same instance of the common data, so all of the common data has to be prepared before its first use by a driver.
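The once-per-timestep update described above could be sketched roughly as follows. This is only an illustration, not the actual SharpyCS code: `CommonData`, `IDriver`, `RecordingDriver`, the `timestep` parameter and all member names are assumptions made for the example.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the data shared by all cars (not a TORCS type).
public sealed class CommonData
{
    public int Timestep = -1;   // last timestep for which the data was refreshed
    public int UpdateCount;     // how often the shared data has been rebuilt
}

// Hypothetical driver interface; the real driver classes are not shown here.
public interface IDriver
{
    void Drive(CommonData common);
}

public sealed class Dispatcher
{
    private readonly CommonData common = new CommonData();
    private readonly List<IDriver> drivers = new List<IDriver>();

    public CommonData Common { get { return common; } }

    // Registers a driver and returns the index used to address it later.
    public int AddDriver(IDriver driver)
    {
        drivers.Add(driver);
        return drivers.Count - 1;
    }

    // Called by the wrapper once per driver and timestep.
    public void Drive(int driverIndex, int timestep)
    {
        if (common.Timestep != timestep)
        {
            // First call in this timestep: refresh the shared data exactly once.
            common.Timestep = timestep;
            common.UpdateCount++;
        }
        // Every driver sees the same CommonData instance.
        drivers[driverIndex].Drive(common);
    }
}

// Stub driver used only to demonstrate the call pattern.
public sealed class RecordingDriver : IDriver
{
    public int Calls;
    public int LastSeenTimestep = -1;

    public void Drive(CommonData common)
    {
        Calls++;
        LastSeenTimestep = common.Timestep;
    }
}
```

With two registered drivers called in timesteps 0, 0 and 1, the shared data is refreshed twice, once per timestep, no matter which driver is called first.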

The driver is computed in the main thread because it has to respond in real time. This way TORCS with our C#-extension can still be used as a "game", meaning that a human driver could race against our robot.

As the next steps, we have to implement the other modules, from the World Viewer through Detection and Tracking to the AI module, and then through Motion Planning back to the driver.

We have to discuss how to implement the individual modules.

To make the internal work of the modules independent of the driver's timestep (and to use the power of our multiprocessor systems), the modules should be based on threads.
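One possible shape for such a thread-based module is sketched below, using only `System.Threading`. It is a sketch under assumptions, not a decided design: the class name `ModuleWorker`, the single `double` input and the doubling "work" are placeholders. The driver thread only hands over the newest data and returns immediately, while the module processes it at its own pace.

```csharp
using System;
using System.Threading;

public sealed class ModuleWorker
{
    private readonly object gate = new object();
    private readonly Thread thread;
    private double latestInput;    // newest value published by the driver thread
    private bool hasInput;
    private bool running = true;
    private double lastResult;
    private int processedCount;

    public ModuleWorker()
    {
        thread = new Thread(Run);
        thread.IsBackground = true;
        thread.Start();
    }

    // Called from the driver's (main) thread; never waits for the module's work.
    public void Publish(double input)
    {
        lock (gate)
        {
            latestInput = input;   // overwrite: only the newest data matters
            hasInput = true;
            Monitor.Pulse(gate);
        }
    }

    public double LastResult { get { lock (gate) { return lastResult; } } }
    public int ProcessedCount { get { lock (gate) { return processedCount; } } }

    // Lets the worker finish any pending input, then waits for it to exit.
    public void Stop()
    {
        lock (gate)
        {
            running = false;
            Monitor.Pulse(gate);
        }
        thread.Join();
    }

    private void Run()
    {
        while (true)
        {
            double input;
            lock (gate)
            {
                while (running && !hasInput) Monitor.Wait(gate);
                if (!hasInput) return;     // stopped and nothing left to process
                input = latestInput;
                hasInput = false;
            }
            double result = input * 2.0;   // placeholder for the module's real work
            lock (gate)
            {
                lastResult = result;
                processedCount++;
            }
        }
    }
}
```

Because `Publish` overwrites the pending input, a slow module skips intermediate timesteps instead of queueing them up, which keeps the driver's real-time loop unaffected by the module's speed.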

The first question is whether a thread can/should create and use its own window, or whether all modules should use a common window. If a common window is used, painting to it has to be done by calling code that belongs to that window. In that case we would have to figure out how to synchronize the calls.

If the needed code (the classes to use) is thread-safe and we use different windows, the output of the individual modules could be produced independently, which means the programming would be more independent too.

For a basic demo, I would like to display a simple window with a bird's-eye view of the position of the car. Which classes should be used here? Is there a code snippet showing how to do this with Mono?
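Independent of the window classes finally chosen (Mono ships an implementation of System.Windows.Forms, which would be one candidate), the bird's-eye view needs a mapping from track coordinates to window pixels. The following is a minimal sketch of that mapping only. The names `BirdsEyeView` and `PixelPoint`, the use of meters as the world unit and the fixed scale are assumptions for illustration.

```csharp
using System;

// Simple pixel coordinate; a GUI toolkit's own point type could be used instead.
public struct PixelPoint
{
    public int X;
    public int Y;

    public PixelPoint(int x, int y)
    {
        X = x;
        Y = y;
    }
}

public sealed class BirdsEyeView
{
    private readonly int widthPx;
    private readonly int heightPx;
    private readonly double metersPerPixel;
    private readonly double centerX;   // world point shown at the window center
    private readonly double centerY;

    public BirdsEyeView(int widthPx, int heightPx, double metersPerPixel,
                        double centerX, double centerY)
    {
        this.widthPx = widthPx;
        this.heightPx = heightPx;
        this.metersPerPixel = metersPerPixel;
        this.centerX = centerX;
        this.centerY = centerY;
    }

    // Maps a world position to window pixels; screen y grows downward.
    public PixelPoint ToPixel(double worldX, double worldY)
    {
        int px = widthPx / 2 + (int)Math.Round((worldX - centerX) / metersPerPixel);
        int py = heightPx / 2 - (int)Math.Round((worldY - centerY) / metersPerPixel);
        return new PixelPoint(px, py);
    }

    // True if the pixel lies inside the window and is worth drawing.
    public bool IsVisible(PixelPoint p)
    {
        return p.X >= 0 && p.X < widthPx && p.Y >= 0 && p.Y < heightPx;
    }
}
```

A paint handler would then call `ToPixel` for the car position in each frame and draw a marker at the returned pixel if `IsVisible` reports that it is inside the window.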

Driving

The driving module will use an interface like that of TORCS:

- Input: from the Vision and world simulator

- Output: high level orders to the car simulator

Vision

Vision module I/O:

- Input: screenshot of the world (real or virtual).

- Output: object geometry and other information needed by the AI.

World Simulator

World simulator I/O:

- Input: Progression of time, instructions to the car

- Output: visual output for the user, screenshots for vision module, APIs to find out where stuff actually is. A warning when our car has "crashed", which means there is a bug in the vision or driving logic.

Extra Modules

Between C# and TORCS, the dispatcher

The dispatcher splits the information from TORCS and sends it to the relevant parts.

The current position and rotation of our car and of the opponents (including "parked cars" as obstacles) are sent to the world viewer.

Information about our fuel, brake temperature, etc. is sent to the AI part (rules, learning) and to the driver. The driver also gets information from the onboard sensors (engine rpm, etc.).

From the driver, the dispatcher gets back the commands to send to TORCS.

While learning, we can also send information to other parts, to get feedback about the quality of our individual steps and to help us work independently.
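The routing described above might look roughly like this. All types here (`TorcsFrame`, `CarPose`, `DriveCommand`, the three module interfaces, `FrameRouter` and the `RecordingModules` stub) are hypothetical simplifications for illustration and do not mirror the real TORCS data structures.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified data types (not the real TORCS structures).
public struct CarPose { public double X, Y, Yaw; }
public struct DriveCommand { public double Steer, Accel, Brake; }

public sealed class TorcsFrame
{
    public CarPose OwnCar;
    public List<CarPose> Others = new List<CarPose>();  // opponents and parked cars
    public double Fuel, BrakeTemperature, EngineRpm;
}

public interface IWorldViewer { void SetPoses(CarPose own, IList<CarPose> others); }
public interface IAiModule { void SetCarState(double fuel, double brakeTemperature); }
public interface IDriverModule
{
    void SetCarState(double fuel, double brakeTemperature);
    void SetSensors(double engineRpm);
    DriveCommand ComputeCommand();
}

public sealed class FrameRouter
{
    private readonly IWorldViewer viewer;
    private readonly IAiModule ai;
    private readonly IDriverModule driver;

    public FrameRouter(IWorldViewer viewer, IAiModule ai, IDriverModule driver)
    {
        this.viewer = viewer;
        this.ai = ai;
        this.driver = driver;
    }

    // Splits one frame of TORCS data and returns the driver's answer.
    public DriveCommand Route(TorcsFrame frame)
    {
        viewer.SetPoses(frame.OwnCar, frame.Others);             // positions and rotations
        ai.SetCarState(frame.Fuel, frame.BrakeTemperature);      // fuel, brake temperature
        driver.SetCarState(frame.Fuel, frame.BrakeTemperature);
        driver.SetSensors(frame.EngineRpm);                      // onboard sensors
        return driver.ComputeCommand();                          // commands for TORCS
    }
}

// Stub implementing all three roles, used only to demonstrate the routing.
public sealed class RecordingModules : IWorldViewer, IAiModule, IDriverModule
{
    public int PoseCount;
    public int CarStateCalls;
    public double LastFuel, LastRpm;

    public void SetPoses(CarPose own, IList<CarPose> others) { PoseCount = 1 + others.Count; }
    public void SetCarState(double fuel, double brakeTemperature) { CarStateCalls++; LastFuel = fuel; }
    public void SetSensors(double engineRpm) { LastRpm = engineRpm; }

    public DriveCommand ComputeCommand()
    {
        DriveCommand cmd = new DriveCommand();
        cmd.Accel = LastRpm < 7000.0 ? 1.0 : 0.0;   // arbitrary placeholder rule
        return cmd;
    }
}
```

Keeping the modules behind small interfaces means each one only sees the part of the TORCS data it needs, which fits the goal of developing the parts independently.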

Torcs data structures in C#

I uploaded Wolf-Dieter's ODS to Google Docs as an experiment. We could also just maintain this text on a set of pages on the wiki, or just in code... What do you think?

{{#widget:Google Spreadsheet |key=pXewQwBp7lZv8XCodm2NuEQ |width=1000 |height=700 }}